Deploying an Azure AI Hub, project and model with Terraform

Have you ever wanted to deploy an Azure AI model with Terraform? Here's how to do it. By the end you'll have an Azure AI Hub, an Azure AI Project and a model deployment that you can use from Azure AI Studio.

I've recently been working on a proof of concept which required using Azure OpenAI.

The last time I did this, I clicked through the portal to create an Azure AI Hub, then created a project inside Azure AI Studio and deployed a model from the Deployments page.

This time the solution was going into production, so I wanted it configured entirely in code to make it self-documenting and repeatable.

Getting it working was a lot harder than I expected.

Initially I tried the Azure Verified Module (AVM) for openai: Azure/terraform-azurerm-openai.

This worked, but it deploys the legacy "Azure OpenAI" resource type. If you open "Azure OpenAI Studio" from that resource, it recommends using the newer AI Hub approach instead.

This post makes extensive use of the AzAPI provider because of:

- hashicorp/terraform-provider-azurerm#25857

- hashicorp/terraform-provider-azurerm#25858

- hashicorp/terraform-provider-azurerm#25859

Anyway, let's get to it:

- Create a file called providers.tf:

```hcl
terraform {
  required_version = ">=1.0"

  # Make sure to configure a backend to save the state
  # backend "azurerm" {}

  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~>3.0"
    }

    azapi = {
      source  = "Azure/azapi"
      version = "~>1.0"
    }

    # Used by random_pet and random_string in main.tf
    random = {
      source  = "hashicorp/random"
      version = "~>3.0"
    }
  }
}

provider "azurerm" {
  features {}
}

provider "azapi" {
}
```
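With the backend line commented out, Terraform keeps state on your local machine. Below is a minimal sketch of an azurerm backend. It assumes you already have a storage account and blob container for state. Every name in it is a placeholder and is not created by this post. It would replace the commented-out backend line inside the terraform block in providers.tf, and Terraform picks it up when you run terraform init.

```hcl
terraform {
  # Placeholder names: point these at an existing storage account and container
  backend "azurerm" {
    resource_group_name  = "my-tfstate-rg"
    storage_account_name = "mytfstatestorage"
    container_name       = "tfstate"
    key                  = "ai-hub.terraform.tfstate"
  }
}
```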

- Create a file called variables.tf:

```hcl
variable "location" {
  description = "Azure region to deploy all resources into"
  type        = string
  default     = "UK South"
}
```
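Model availability varies by region, so you may need to deploy somewhere other than UK South. One way to override the default without editing variables.tf is a terraform.tfvars file in the same directory, which Terraform loads automatically. The region below is only an example, so check that the model you want is available there first.

```hcl
# terraform.tfvars: overrides the default location in variables.tf
location = "Sweden Central"
```

For a one-off run you can pass the value on the command line instead, for example `terraform plan -var="location=Sweden Central" -out tfplan`.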

- Create a file called main.tf:

```hcl
# Resource group with a randomly generated name
resource "random_pet" "rg_name" {
  prefix = "this"
}

resource "azurerm_resource_group" "this" {
  location = var.location
  name     = random_pet.rg_name.id
}

# Details of the identity running Terraform (tenant ID, object ID)
data "azurerm_client_config" "current" {
}

# Short random suffix used for globally unique resource names
resource "random_string" "this" {
  length  = 8
  special = false
  upper   = false
}

# Storage account used by the AI Hub
resource "azurerm_storage_account" "this" {
  name                            = random_string.this.result
  location                        = azurerm_resource_group.this.location
  resource_group_name             = azurerm_resource_group.this.name
  account_tier                    = "Standard"
  account_replication_type        = "LRS"
  allow_nested_items_to_be_public = false
}

# Key Vault used by the AI Hub.
# Key Vault names must start with a letter and the random string may
# start with a digit, so prefix it.
resource "azurerm_key_vault" "this" {
  name                     = "kv-${random_string.this.result}"
  location                 = azurerm_resource_group.this.location
  resource_group_name      = azurerm_resource_group.this.name
  tenant_id                = data.azurerm_client_config.current.tenant_id
  sku_name                 = "standard"
  purge_protection_enabled = false
}

# Optional Application Insights
resource "azurerm_application_insights" "this" {
  name                = random_string.this.result
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  application_type    = "web"
}
```

- Create a file called hub.tf:

```hcl
resource "azapi_resource" "hub" {
  type      = "Microsoft.MachineLearningServices/workspaces@2024-04-01-preview"
  name      = "hub"
  location  = azurerm_resource_group.this.location
  parent_id = azurerm_resource_group.this.id

  identity {
    type = "SystemAssigned"
  }

  body = jsonencode({
    properties = {
      description         = "Your description"
      friendlyName        = "Display name for Hub"
      storageAccount      = azurerm_storage_account.this.id
      keyVault            = azurerm_key_vault.this.id
      applicationInsights = azurerm_application_insights.this.id
    }
    # "Hub" makes this Machine Learning workspace an Azure AI Hub
    kind = "Hub"
  })
}
```

- Create a file called project.tf:

```hcl
resource "azapi_resource" "project" {
  type      = "Microsoft.MachineLearningServices/workspaces@2024-04-01-preview"
  name      = "my-project"
  location  = azurerm_resource_group.this.location
  parent_id = azurerm_resource_group.this.id

  identity {
    type = "SystemAssigned"
  }

  body = jsonencode({
    properties = {
      description   = "Description"
      friendlyName  = "Display name"
      # Links the project to the hub created in hub.tf
      hubResourceId = azapi_resource.hub.id
    }
    kind = "Project"
  })
}
```

- Create a file called ai-services.tf:

```hcl
# Azure AI Services account that hosts the model deployment
resource "azapi_resource" "AIServices" {
  type      = "Microsoft.CognitiveServices/accounts@2023-10-01-preview"
  name      = "my-ai-services"
  location  = azurerm_resource_group.this.location
  parent_id = azurerm_resource_group.this.id

  identity {
    type = "SystemAssigned"
  }

  body = jsonencode({
    name = "my-ai-services"
    properties = {
      apiProperties = {
        statisticsEnabled = false
      }
      customSubDomainName = random_string.this.result
    }
    kind = "AIServices"
    sku = {
      // https://learn.microsoft.com/en-us/azure/analysis-services/analysis-services-overview#europe
      name = "S0"
    }
  })

  # Export the response so the endpoint can be read by the connection below
  response_export_values = ["*"]
}

# Connects the AI Services account to the hub
resource "azapi_resource" "AIServicesConnection" {
  type      = "Microsoft.MachineLearningServices/workspaces/connections@2024-04-01-preview"
  name      = "my-ai-services"
  parent_id = azapi_resource.hub.id

  body = jsonencode({
    properties = {
      category      = "AIServices"
      target        = jsondecode(azapi_resource.AIServices.output).properties.endpoint
      authType      = "AAD"
      isSharedToAll = true
      metadata = {
        ApiType    = "Azure"
        ResourceId = azapi_resource.AIServices.id
      }
    }
  })

  response_export_values = ["*"]
}
```
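Because response_export_values exports the account's properties, you can also surface the endpoint as a Terraform output, which is handy when configuring an application. A small sketch, assuming a new outputs.tf file; the output names are my own choice:

```hcl
# outputs.tf: values that are useful once the deployment finishes
output "resource_group_name" {
  value = azurerm_resource_group.this.name
}

output "ai_services_endpoint" {
  # Same expression the hub connection uses for its target
  value = jsondecode(azapi_resource.AIServices.output).properties.endpoint
}
```

After an apply, `terraform output ai_services_endpoint` prints the value.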

- Create a file called deployment.tf:

You'll probably want to apply everything up to this point first, then explore Azure AI Studio to choose a model and check which versions are available in your region. For OpenAI models, this document is a good reference.

```hcl
# Deploys a model into the AI Services account
resource "azurerm_cognitive_deployment" "model" {
  name                 = "gpt-4"
  cognitive_account_id = azapi_resource.AIServices.id

  model {
    format  = "OpenAI"
    name    = "gpt-4"
    version = "0125-Preview"
  }

  scale {
    type     = "Standard"
    # Quota allocated to this deployment; check your regional quota
    capacity = 10
  }

  rai_policy_name = "Microsoft.DefaultV2"
}
```
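Since the model choice often changes after exploring Azure AI Studio, one option is to move the model details into variables. This is a sketch with hypothetical variable names, model_name and model_version, defaulting to the values used above. To use them, you would set name and model.name in deployment.tf to var.model_name and model.version to var.model_version.

```hcl
# Hypothetical variables so the model can be changed without editing deployment.tf
variable "model_name" {
  description = "Name of the model to deploy, for example gpt-4"
  type        = string
  default     = "gpt-4"
}

variable "model_version" {
  description = "Model version available in the chosen region"
  type        = string
  default     = "0125-Preview"
}
```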

- Permissions:

You'll likely want to grant permissions on the AI Services resource so that it can be used. To grant access to yourself (the identity running Terraform), create a file called ai-permissions.tf:

```hcl
resource "azurerm_role_assignment" "identity_access_to_ai_services" {
  # Object ID of the identity running Terraform
  principal_id         = data.azurerm_client_config.current.object_id
  scope                = azapi_resource.AIServices.id
  role_definition_name = "Cognitive Services OpenAI User"
}
```
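The same pattern works for other principals, such as the managed identity of an application that will call the model. A sketch, assuming a hypothetical variable app_principal_id that holds that identity's object ID:

```hcl
# Hypothetical: object ID of the application's managed identity
variable "app_principal_id" {
  description = "Object ID of the identity that will call the model"
  type        = string
}

# Lets that identity call the deployed model
resource "azurerm_role_assignment" "app_access_to_ai_services" {
  principal_id         = var.app_principal_id
  scope                = azapi_resource.AIServices.id
  role_definition_name = "Cognitive Services OpenAI User"
}
```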

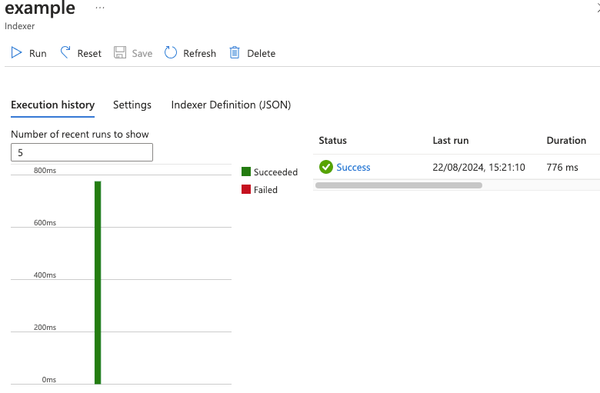

Deploy your new AI project

First, run terraform init:

```shell
terraform init
```

You should see output that ends with something like:

```
...
Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Then run a plan to see what will be created:

```shell
terraform plan -out tfplan
```

Review the plan and, if it looks good, apply it:

```shell
terraform apply "tfplan"
```

Success!

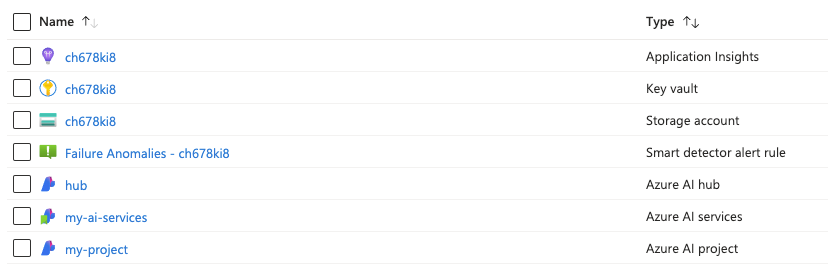

You should now have:

- a Storage Account

- a Key Vault

- an Application Insights resource

- an Azure AI Hub

- an Azure AI Project

- an Azure AI Services account connected to the hub

- a model deployment that you can test in Azure AI Studio

The resources should look something like this:

Here is a GitHub repository with all of this included: